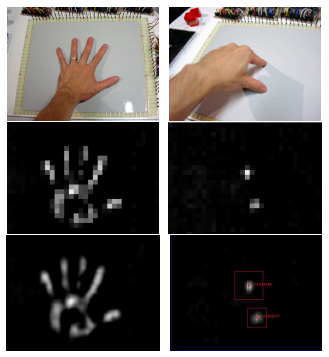

2002: Mutual capacitive sensing in Sony's SmartSkin

Using the Sony SmartSkin.

In 2002, Sony introduced a flat input surface that could recognize multiple hand positions and touch points at the same time. The company called it SmartSkin. The technology worked by calculating the distance between the hand and the surface using capacitive sensing and a mesh-shaped antenna. Unlike the camera-based gesture recognition systems used in other technologies, SmartSkin integrated all of its sensing elements into the touch surface itself. This also meant that it wouldn't malfunction in poor lighting conditions. The ultimate goal of the project was to turn everyday surfaces, like an ordinary table or a wall, into interactive ones with the help of a nearby PC. However, the technology did more for capacitive touch than its creators may have intended, including introducing support for multiple contact points.
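To make the idea of a sensing mesh more concrete, here is a minimal Python sketch of how several touch points could be pulled out of a grid of readings. It is not Sony's algorithm. It assumes, hypothetically, that each crossing of the mesh yields one non-negative proximity value in `grid[y][x]`, larger when a hand is closer; `find_touch_points` and its `threshold` parameter are illustrative names. Each local peak above the threshold is treated as one contact, and a weighted centroid of its 3x3 neighborhood estimates a position finer than the mesh spacing.

```python
from typing import List, Tuple

Grid = List[List[float]]  # hypothetical: grid[y][x] = proximity reading at one mesh crossing


def find_touch_points(grid: Grid, threshold: float) -> List[Tuple[float, float]]:
    """Return sub-cell (x, y) estimates for every local peak above threshold."""
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    rows, cols = len(grid), len(grid[0])
    points = []
    for y in range(rows):
        for x in range(cols):
            v = grid[y][x]
            if v < threshold:
                continue
            # 3x3 neighborhood around (x, y), clipped at the grid edges.
            neighbors = [
                (nx, ny)
                for ny in range(max(0, y - 1), min(rows, y + 2))
                for nx in range(max(0, x - 1), min(cols, x + 2))
            ]
            # A peak beats earlier cells strictly and later cells or ties,
            # so a flat plateau is reported only once.
            is_peak = all(
                v > grid[ny][nx] if (ny, nx) < (y, x) else v >= grid[ny][nx]
                for nx, ny in neighbors
            )
            if not is_peak:
                continue
            # Weighted centroid: readings act as weights for the cell positions.
            total = sum(grid[ny][nx] for nx, ny in neighbors)
            cx = sum(nx * grid[ny][nx] for nx, ny in neighbors) / total
            cy = sum(ny * grid[ny][nx] for nx, ny in neighbors) / total
            points.append((cx, cy))
    return points
```

For example, a grid with two separate bumps of readings yields two contact estimates, one per hand or finger, which is the multi-point behavior the prototypes demonstrated.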

How the SmartSkin sensed gestures.

Sony Computer Science Laboratories, Inc.

Jun Rekimoto of the Interaction Laboratory at Sony's Computer Science Laboratories noted the advantages of this technology in a white paper. He said technologies like SmartSkin offer "natural support for multiple-hand, multiple-user operations." More than two users can touch the surface simultaneously without any interference. Two prototypes were developed to show SmartSkin used as an interactive table and as a gesture-recognition pad. The second prototype used a finer mesh than the first so that it could map out more precise finger coordinates. Overall, the technology was meant to give virtual objects a real-world feel, essentially recreating how humans use their fingers to pick up and manipulate objects.

2002-2004: Failed tablets and Microsoft Research's TouchLight

A multitouch tablet input device named HandGear.

Bill Buxton

Multitouch technology struggled in the mainstream, appearing in specialty devices but never quite catching a big break. One almost came in 2002, when Canada-based DSI Datotech developed the HandGear + GRT device (the acronym "GRT" referred to the device's Gesture Recognition Technology). The device's multipoint touchpad worked a bit like the aforementioned iGesture pad in that it could recognize various gestures and let users control their computers with it as an input device. "We wanted to make quite sure that HandGear would be easy to use," VP of Marketing Tim Heaney said in a press release. "So the technology was designed to recognize hand and finger movements which are completely natural, or intuitive, to the user, whether they're left- or right-handed. After a short learning-period, they're literally able to concentrate on the work at hand, rather than on what the fingers are doing."

HandGear also enabled users to "grab" three-dimensional objects in real time, further extending that idea of freedom and productivity in the design process. The company even made the API available to developers via Autodesk. Unfortunately, as Buxton mentions in his overview of multitouch, the company ran out of money before its product shipped, and DSI closed its doors.

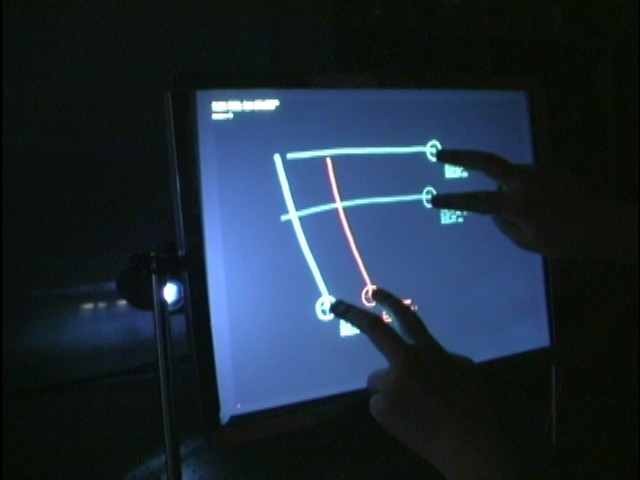

Andy Wilson explains the technology behind the TouchLight.

Two years later, Andrew D. Wilson, a researcher at Microsoft Research, developed a gesture-based imaging touchscreen and 3D display called TouchLight. It used a rear-projection display to turn a sheet of acrylic plastic into an interactive surface. The display could sense multiple fingers and hands from more than one user, and because of its 3D capabilities, it could also be used as a makeshift mirror.

The TouchLight was a neat technology demonstration, and it was eventually licensed out for production to Eon Reality before the technology proved too expensive to be packaged into a consumer device. However, this wouldn't be Microsoft's only foray into fancy multitouch display technology.

2006: Multitouch sensing through "frustrated total internal reflection"

Jeff Han

In 2006, Jeff Han gave the first public demonstration of his intuitive, interface-free, touch-driven computer screen at a TED Conference in Monterey, CA. In his presentation, Han moved and manipulated photos on a giant light box using only his fingertips. He flicked photos, stretched them out, and pinched them away, all with captivating natural ease. "This is something Google should have in their lobby," he joked. The demo showed that a high-resolution, scalable touchscreen could be built without spending much money.
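Stretching and pinching a photo with two fingers boils down to comparing where the fingers are now with where they started. The Python sketch below is a common generic approach, not code from Han's demo: the function name `two_finger_transform` is hypothetical, and it assumes touch coordinates arrive as `(x, y)` pairs already matched to the same two fingers. The change in distance between the fingers gives the scale, the change in the angle of the line joining them gives the rotation, and the movement of their midpoint gives the translation.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def two_finger_transform(
    p1: Point, p2: Point, q1: Point, q2: Point
) -> Tuple[float, float, Point]:
    """Map the finger pair (p1, p2) at gesture start to (q1, q2) now.

    Returns (scale, rotation in radians, (dx, dy) translation of the midpoint).
    """
    old_dx, old_dy = p2[0] - p1[0], p2[1] - p1[1]
    new_dx, new_dy = q2[0] - q1[0], q2[1] - q1[1]
    old_len = math.hypot(old_dx, old_dy)
    if old_len == 0:
        raise ValueError("the two starting touch points must differ")
    # Spreading the fingers apart gives scale > 1; pinching gives scale < 1.
    scale = math.hypot(new_dx, new_dy) / old_len
    rotation = math.atan2(new_dy, new_dx) - math.atan2(old_dy, old_dx)
    # Wrap into [-pi, pi] so a small twist is not reported as nearly 2*pi.
    rotation = math.atan2(math.sin(rotation), math.cos(rotation))
    old_mid = ((p1[0] + p2[0]) / 2, (p1[1] + p2[1]) / 2)
    new_mid = ((q1[0] + q2[0]) / 2, (q1[1] + q2[1]) / 2)
    translation = (new_mid[0] - old_mid[0], new_mid[1] - old_mid[1])
    return scale, rotation, translation
```

Applying the returned scale, rotation, and translation to a photo's position every frame is one way to make the image appear to stick to the fingertips.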

A diagram of Jeff Han's FTIR-based multitouch sensing.

Jeff Han

Han had discovered that "robust" multitouch sensing was possible using "frustrated total internal reflection" (FTIR), a technique from the biometrics community used for fingerprint imaging. FTIR works by shining light through a sheet of acrylic or plexiglass. The light (infrared is commonly used) bounces back and forth between the top and bottom surfaces of the acrylic as it travels. When a finger touches the surface, the light scatters at the point of contact, hence the term "frustrated." The scattered light shows up as white blobs in the images captured by an infrared camera. The computer analyzes where each finger is touching to mark its placement and assign it a coordinate. The software can then analyze those coordinates to perform tasks such as resizing or rotating objects.
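The step from "white blobs" to finger coordinates can be sketched as thresholding followed by connected-component labeling. This is a minimal illustration, not Han's software: it assumes a hypothetical grayscale infrared frame stored as `frame[y][x]` brightness values from 0 to 255, and the `threshold` and `min_area` defaults are made-up tuning values. Each 4-connected group of bright pixels becomes one blob, and the blob's centroid becomes its touch coordinate.

```python
from collections import deque
from typing import List, Tuple


def detect_blobs(
    frame: List[List[int]], threshold: int = 128, min_area: int = 2
) -> List[Tuple[float, float]]:
    """Return the centroid (x, y) of each bright 4-connected blob in an IR frame."""
    height, width = len(frame), len(frame[0])
    seen = [[False] * width for _ in range(height)]
    centroids = []
    for y in range(height):
        for x in range(width):
            if seen[y][x] or frame[y][x] < threshold:
                continue
            # Breadth-first flood fill over bright pixels connected to (x, y).
            queue = deque([(x, y)])
            seen[y][x] = True
            pixels = []
            while queue:
                px, py = queue.popleft()
                pixels.append((px, py))
                for nx, ny in ((px + 1, py), (px - 1, py), (px, py + 1), (px, py - 1)):
                    if (0 <= nx < width and 0 <= ny < height
                            and not seen[ny][nx] and frame[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            # Discard specks too small to be a fingertip (likely sensor noise).
            if len(pixels) >= min_area:
                cx = sum(p[0] for p in pixels) / len(pixels)
                cy = sum(p[1] for p in pixels) / len(pixels)
                centroids.append((cx, cy))
    return centroids
```

Real systems typically add calibration between camera pixels and screen coordinates; that mapping is left out here.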

Jeff Han demonstrates his new "interface-free" touch-driven screen.

After the TED talk became a YouTube hit, Han went on to launch a startup called Perceptive Pixel. A year after the talk, he told Wired that his multitouch product did not have a name yet. And although he had some interested clients, Han said they were all "really high-end clients. Mostly defense."

Last year, Han sold his company to Microsoft in an effort to make the technology more mainstream and affordable for consumers. "Our company has always been about productivity use cases," Han told AllThingsD. "That's why we have always focused on these larger displays. Office is what people think of when they think of productivity."

2008: Microsoft Surface

Before there was a 10-inch tablet, the name "Surface" referred to Microsoft's high-end tabletop graphical touchscreen, originally built inside an actual IKEA table with a hole cut into the top. Although it was demoed to the public in 2007, the idea originated back in 2001. Researchers in Redmond envisioned an interactive work surface that colleagues could use to manipulate and pass objects back and forth. For many years, the work was hidden behind a non-disclosure agreement. It took 85 prototypes before Surface 1.0 was ready to go.

As Ars wrote in 2007, the Microsoft Surface was essentially a computer embedded in a medium-sized table, with a large, flat display on top. The image was rear-projected onto the display surface from within the table, and the system sensed where the user touched the screen through cameras mounted inside the table, looking upward toward the user. As fingers and hands interacted with what was on screen, the Surface's software tracked the touch points and triggered the appropriate actions. The Surface could recognize several touch points at a time, as well as objects with small "domino" stickers attached to them. Later in its development cycle, Surface also gained the ability to identify devices via RFID.
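Tracking touch points from one camera frame to the next means deciding which new point belongs to which finger. The sketch below uses greedy nearest-neighbor matching, a simple generic technique; the source does not describe Microsoft's actual tracker, so `track_touches`, its integer ID scheme, and the `max_jump` distance limit (in hypothetical camera pixels) are all assumptions. Points that match nothing from the previous frame are treated as newly landed fingers, and previous IDs that go unmatched are dropped as lifted fingers.

```python
import math
from typing import Dict, List, Set, Tuple

Point = Tuple[float, float]


def track_touches(
    previous: Dict[int, Point], current: List[Point], next_id: int, max_jump: float = 30.0
) -> Tuple[Dict[int, Point], int]:
    """Match this frame's points to last frame's touch IDs.

    Returns the new {touch_id: point} map and the next unused ID.
    """
    # Every (distance, old_id, new_index) candidate, closest pairs first.
    pairs = sorted(
        (math.dist(old_pt, new_pt), old_id, i)
        for old_id, old_pt in previous.items()
        for i, new_pt in enumerate(current)
    )
    tracked: Dict[int, Point] = {}
    used_ids: Set[int] = set()
    used_points: Set[int] = set()
    for distance, old_id, i in pairs:
        if distance > max_jump:
            break  # sorted order: every remaining pair is even farther apart
        if old_id in used_ids or i in used_points:
            continue
        tracked[old_id] = current[i]
        used_ids.add(old_id)
        used_points.add(i)
    # Unmatched points are new fingers; unmatched old IDs simply disappear.
    for i, pt in enumerate(current):
        if i not in used_points:
            tracked[next_id] = pt
            next_id += 1
    return tracked, next_id
```

Stable IDs are what let software treat a moving finger as one continuous drag rather than a series of unrelated taps. Greedy matching is not globally optimal, but it is cheap and usually adequate when fingers are far apart relative to how far they move per frame.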

Bill Gates demonstrates the Microsoft Surface.

The original Surface was unveiled at the All Things D conference in 2007. Although many of its design concepts weren't new, it very effectively illustrated the real-world use case for touchscreens integrated into something the size of a coffee table. Microsoft then brought the 30-inch Surface to CES 2008 to demo it, but the company explicitly said that it was targeting the "entertainment retail space." Surface was designed primarily for Microsoft's commercial customers, who would use it to give consumers a taste of the hardware. The company partnered with several big-name hotels and resorts, like Starwood and Harrah's Casino, to showcase the technology in their lobbies. Companies like AT&T used the Surface to show off the latest handsets to consumers entering their brick-and-mortar retail locations.

Surface at CES 2008.

Rather than calling it a graphical user interface (GUI), Microsoft referred to the Surface's interface as a natural user interface, or "NUI." The phrase suggested that the technology would feel almost instinctive to the end user, as natural as interacting with any tangible object in the real world. It also reflected the fact that the interface was driven primarily by the user's touch rather than by input devices like a mouse or keyboard. (Plus, NUI, pronounced "new-ey," made for a snappy, marketing-friendly acronym.)

Microsoft introduces the Samsung SUR40.

In 2011, Microsoft partnered with manufacturers like Samsung to produce sleeker, newer tabletop Surface hardware. For example, the Samsung SUR40 had a 40-inch 1080p LED display and drastically reduced the amount of internal space required for the touch-sensing mechanisms. At 4 inches thick, it was thinner than its predecessors, and the size reduction made it possible to mount the display on a wall rather than requiring a table to house the camera and sensors. It cost around $8,400 at launch and ran Windows 7 and Surface 2.0 software.

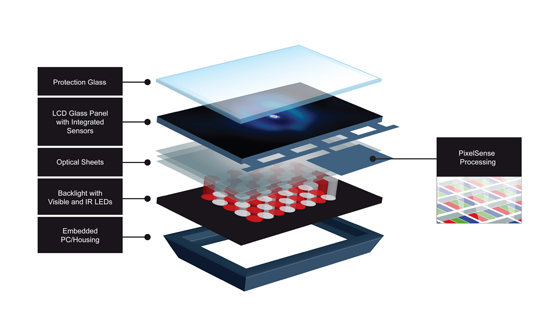

Microsoft

Last year, Microsoft rebranded the technology as PixelSense after it introduced its unrelated Surface tablet to consumers. The name "PixelSense" refers to how the technology actually works: a touch-sensitive protective glass sits on top of an infrared backlight. As the light hits the glass, it is reflected back to integrated sensors, which convert that light into an electrical signal. Each signal is referred to as a "value," and together those values form a picture of what is on the display. The picture is then analyzed using image-processing techniques, and the output is sent to the connected computer.
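The step of turning sensor "values" into a usable picture can be illustrated with background subtraction, a standard image-processing technique. This is a hedged sketch rather than the PixelSense pipeline: it assumes a hypothetical calibration `baseline` frame captured with nothing on the glass, flags each pixel whose `raw` reading exceeds that baseline by more than `threshold`, and slowly adapts untouched pixels to ambient infrared drift with an exponential moving average controlled by the made-up parameter `alpha`.

```python
from typing import List

Frame = List[List[float]]  # hypothetical: one sensor "value" per pixel
Mask = List[List[bool]]


def touch_mask(raw: Frame, baseline: Frame, threshold: float) -> Mask:
    """Flag pixels whose reading rises clearly above the empty-glass baseline."""
    return [
        [r - b > threshold for r, b in zip(raw_row, base_row)]
        for raw_row, base_row in zip(raw, baseline)
    ]


def update_baseline(baseline: Frame, raw: Frame, mask: Mask, alpha: float = 0.05) -> Frame:
    """Blend untouched pixels toward the new reading (exponential moving average).

    Pixels currently flagged as touched keep their old baseline, so a resting
    finger is not gradually absorbed into the background.
    """
    return [
        [b if touched else (1 - alpha) * b + alpha * r
         for b, r, touched in zip(base_row, raw_row, mask_row)]
        for base_row, raw_row, mask_row in zip(baseline, raw, mask)
    ]
```

The resulting mask could then be grouped into connected regions and reduced to contact coordinates or object outlines before being passed on to applications.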

PixelSense has four main features: it doesn't require a mouse and keyboard to work, more than one user can interact with it at once, it can recognize certain objects placed on the glass, and it supports multiple contact points. The name PixelSense may refer to that last feature in particular, since each pixel can actually sense whether or not there is touch contact.

Although it would make an awesome living room addition, Microsoft continues to market the Surface hardware as a business tool rather than a consumer product.

Touch today and tomorrow?

It can't be overstated: each of these technologies had a monumental impact on the gadgets we use today. Everything from our smartphones to laptop trackpads and Wacom tablets can be connected in some way to the many inventions, discoveries, and patents in the history of touchscreen technology. Android and iOS users should thank E.A. Johnson for capacitive touch-capable smartphones, while restaurants may send their regards to Dr. G. Samuel Hurst for the resistive touchscreens on their point-of-sale (POS) systems.

In the next part of our series, we'll dive deeper into the devices of today. (Just how has the work of FingerWorks impacted those iDevices anyway?) But history didn't end in 2011, either. We'll also discuss how some of today's major players, like Apple and Samsung, continue to contribute to the evolution of touchscreen gadgets. Don't scroll that finger; stay tuned!

(END) -- Nandakumar
